Theme: Research, Collaborating with industry for teaching and learning, Knowledge exchange

Author: Prof Balbir Barn (Middlesex University), Prof Tony Clark (Aston University), Vinay Kulkarni (TCS) and Dr Souvik Barat (TCS)

Keywords: Digital Twin, Model Driven Engineering, Inclusive Innovation

Abstract: Researchers at Middlesex University initiated a collaboration with Tata Consultancy Services (TCS) Research in India in 2011, based on their research on lightweight methods for enterprise modelling. Since 2014, that initial introduction has developed into a sustained collaborative research programme on programming languages and environments that support model-based decision-making in complex and uncertain scenarios. The research programme has supported annual sabbatical visits to the TCS research labs in India, a PhD studentship, and regular workshops and advanced tutorials at international conferences. The continuing programme is an example of industry-based research problems driving academic collaboration in an international context. It has led to over 30 research outputs, an Impact Case Study submitted to REF2021, a TCS software product and the establishment of the London Digital Twin Research Centre at Middlesex.

Introduction

This case study describes the outcomes of an ongoing collaboration between Middlesex University and Tata Consultancy Services Research, India’s premier software research centre. The collaboration, initiated in 2011, was triggered by a research paper published by Clark, Barn and Oussena [3]. That paper proposed a precise, lightweight framework for Enterprise Architecture that views an organisation as an engine that executes in terms of hierarchically decomposed, communicating components. Following a visit to the TCS Research Labs (TRDDC) in Pune, India, TCS and Middlesex established a joint research programme to further the notion of the “Model Driven Organisation”. A key feature of the collaboration was inclusive innovation, from problem location through to shared mutual benefits. The research programme has supported annual sabbatical visits to the TCS research labs in India, a PhD studentship, and regular workshops and advanced tutorials at international conferences. The continuing programme is an example of industry-based research problems driving academic collaboration in an international context that has led to over 30 research outputs, an Impact Case Study submitted to REF2021, a TCS software product and the establishment of the London Digital Twin Research Centre at Middlesex.

Systemising a model for collaboration

In 2011, developing a strong, sustained and inclusive model of collaboration with industry was seen as an important element of reputation-building activities for Middlesex University as it set out to establish an overseas campus in India. The goal was for Middlesex to be seen to deliver impact not only through project outcomes but also as value to the geographical setting of the collaboration. Thus, in 2011, two senior academics, Prof Balbir Barn and Prof Tony Clark, embarked on a visit to India’s leading IT research centres, including the Tata Research Development and Design Centre (TRDDC), IBM Research, Microsoft Research, Accenture Research, HCL Research, Infosys, Cognizant and others. During these visits, the senior academics were able to showcase Middlesex Computer Science research activities, leading to two memoranda of cooperation, with Accenture and TRDDC. Middlesex CS had also decided to establish a strong presence at India’s premier Software Engineering conference (ISEC) through research papers, tutorials, and the organising of workshops aimed at capacity building in Indian academia, thereby generating value in the process.

Further meetings at ISEC in 2012 with Vinay Kulkarni, Chief Scientist at TRDDC, led to the idea of a collaboration around the notion of the “Model Driven Organisation”, in which an enterprise is represented symbolically by a model that draws its information and data from the range of software artefacts the enterprise uses in its daily operations. Executives can then use this model representation as a decision-making aid.

The collaboration was seen as a shared vision that would benefit both partners (TRDDC and MDX), so at the outset we agreed to make our joint research publicly available, with both partners retaining the option to productise any research outputs. This collaboration can also be seen as a model for Inclusive Innovation: the research roadmap addresses a problem from the “wild”, key stakeholders are engaged equally from research problem formulation through to research publication, and the benefits are mutual.

The collaboration also developed a way of working that was critical to its subsequent success. TRDDC supported the travel and subsistence of Barn and Clark for annual two-week “mini-sabbaticals” at its research labs in Pune. These visits, which have run from 2012 to the present (pausing only because of COVID-19), are linked to the ISEC conference, where papers, tutorials and workshops have been regularly presented. There has been a strong focus on the development of young academics in India at this conference, further establishing the impact of our inclusive innovation approach by generating value in the setting. While the primary interaction is with the TRDDC Software Engineering Laboratory, meetings with other laboratories (such as Psychology) have made seminars and other research exploration opportunities possible. Some of the annual meetings have been supplemented by further meetings at Middlesex. Each annual visit is an intensive research meeting from which the research plan for the year emerges, alongside a publication and impact plan. Very early on, we recognised the potential for an impact case study for the periodic research evaluation exercise conducted in the UK.

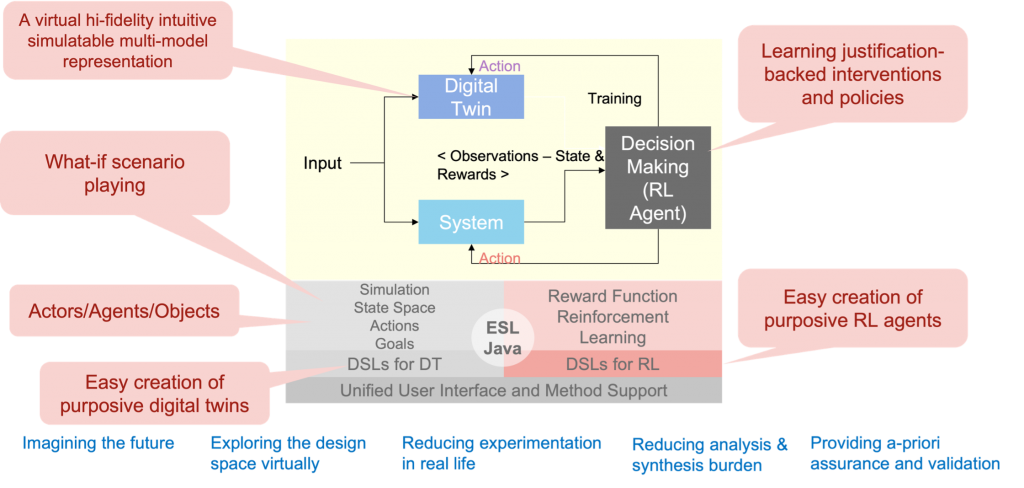

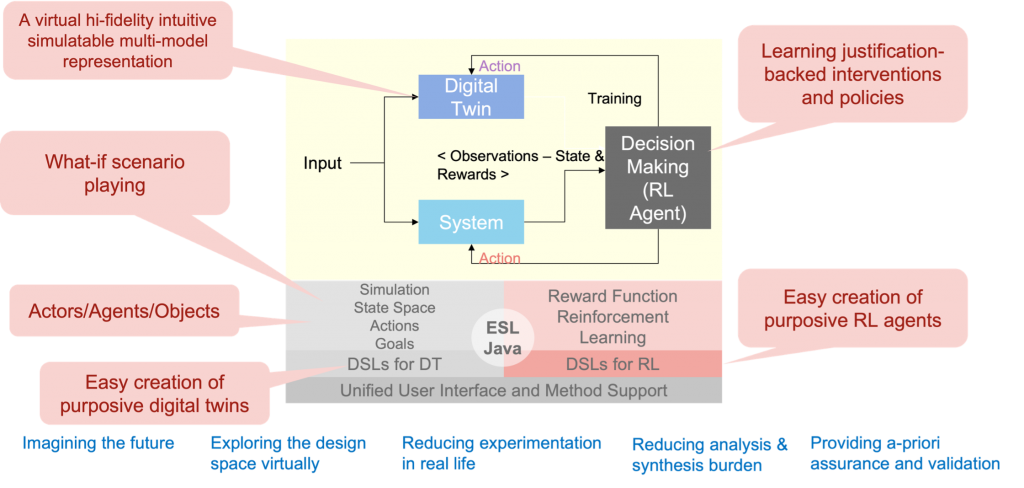

Figure 1: Research Roadmap

Outcomes

The collaboration has proved singularly successful in delivering concrete outcomes. Our regularly updated research roadmap (see Figure 1) has evolved from our initial concept of the Model Driven Organisation, through a practical language (ESL) and execution environment for enterprise simulation, to current advances in methodologies for digital twin design.

Along the way, a TCS Research Scientist, Souvik Barat, completed a doctoral study on the design of a modelling language to support enterprise decision-making. This language later contributed to work by Dr Souvik Barat to design a sociotechnical digital twin of the City of Pune to support non-pharmaceutical interventions during the COVID-19 pandemic.

The ESL language (led by Prof Tony Clark), developed as a TRL-5 prototype through the collaboration, has formed the basis of TCS TwinX™, a software product developed by TCS that is now being used by TCS consulting.

The collaborative research programme has generated over 30 research publications in leading computing conferences and journals; representative publications include [2,4,5,6]. The team has also generated impact and knowledge transfer through advanced tutorials and workshops at conferences, and the collaboration has produced an edited book [7].

Reflecting the importance of outcomes to both organisations, the research has contributed to executing the research strategy of TCS Research (see strategy document) and has led directly to an impact case study submitted to REF2021.

Further value from our inclusive innovation approach includes the development of research publication preparation skills at TCS and wider social impact through the pandemic planning activities in Pune City [1] (see the video: https://www.youtube.com/watch?v=x48G7-bOvPY).

In 2019, as our research steadily shifted towards Digital Twin technologies, Middlesex established the London Digital Twin Research Centre (LDTRC). The centre combines software engineering research with cyber-physical systems and telecommunications research, and provides a means of showcasing a range of externally funded Digital Twin research projects. The centre’s work has been brought to the attention of EPSRC, and the centre holds regular business-facing workshops.

Lessons learnt

Developing a strategic collaboration requires investment from universities, a spirit that places collaboration rather than competition at its heart, and a willingness among academics to look for long-term benefit. In this case, two senior academics spent three weeks touring Indian IT research labs with no guarantee of success. Such speculative investment can only be justified when it serves the university’s wider aims, so alignment with university strategy is critical.

Systemising this model of cooperation should be considered a strategic objective of UK Research and Innovation. It is imperative to recognise that such success can be found in all our universities. While the EPSRC and RAE have “visiting academic-industrial collaborator” schemes, these could generate much greater outcomes if they operated at a smaller scale and were genuinely accessible to all academics at all institutions.

References

[1] Barat, S., Parchure, R., Darak, S., Kulkarni, V., Paranjape, A., Gajrani, M. and Yadav, A., 2021. An agent-based digital twin for exploring localized non-pharmaceutical interventions to control COVID-19 pandemic. Transactions of the Indian National Academy of Engineering, 6(2), pp. 323-353.

[2] Barat, S., Kulkarni, V., Clark, T. and Barn, B., 2019. An actor based simulation driven digital twin for analyzing complex business systems. In Proceedings of the 2019 Winter Simulation Conference, Maryland, USA. (doi:10.1109/WSC40007.2019.9004694)

[3] Clark, T., Barn, B.S. and Oussena, S., 2011, February. LEAP: a precise lightweight framework for enterprise architecture. In Proceedings of the 4th India Software Engineering Conference (pp. 85-94). ACM. (doi:10.1145/1953355.1953366)

[4] Clark, T., Kulkarni, V., Barn, B., France, R., Frank, U. and Turk, D., 2014, January. Towards the model driven organization. In 2014 47th Hawaii International Conference on System Sciences (pp. 4817-4826). IEEE. (doi:10.1109/HICSS.2014.591)

[5] Clark, T., Kulkarni, V., Barat, S. and Barn, B., 2017, June. ESL: an actor-based platform for developing emergent behaviour organisation simulations. In International Conference on Practical Applications of Agents and Multi-Agent Systems (pp. 311-315). Springer, Cham. (doi:10.1007/978-3-319-59930-4_27)

[6] Kulkarni, V., Barat, S., Clark, T. and Barn, B., 2015, September. Toward overcoming accidental complexity in organisational decision-making. In 2015 ACM/IEEE 18th International Conference on Model Driven Engineering Languages and Systems (MODELS) (pp. 368-377). IEEE. (doi:10.1109/MODELS.2015.7338268)

[7] Kulkarni, V., Reddy, S., Clark, T. and Barn, B.S. (eds.), 2020. Advanced Digital Architectures for Model-Driven Adaptive Enterprises. Hershey, PA: IGI Global. (doi:10.4018/978-1-7998-0108-5)

Any views, thoughts, and opinions expressed herein are solely those of the author(s) and do not necessarily reflect the views, opinions, policies, or position of the Engineering Professors’ Council or the Toolkit sponsors and supporters.